Object detection has undergone a dramatic and fundamental shift. I’m not talking about deep learning here – deep learning is really more about classification and specifically about feature learning. Feature learning has begun to play and will continue to play a critical role in machine vision. Arguably, in a few years we’ll have a diversity of approaches for learning rich feature hierarchies from large amounts of data; it’s a fascinating topic. However, as I said, this post is not about deep learning.

Rather, perhaps an even more fundamental shift has occurred in object detection: the recent crop of top detection algorithms abandons sliding windows in favor of segmentation in the detection pipeline. Yes, you heard right, segmentation!

First some evidence for my claim. Arguably the three top performing object detection systems as of the date of this post (12/2013) are the following:

- Segmentation as Selective Search++ (UvA-Euvision)

- Regionlets for Object Detection (NEC-MU)

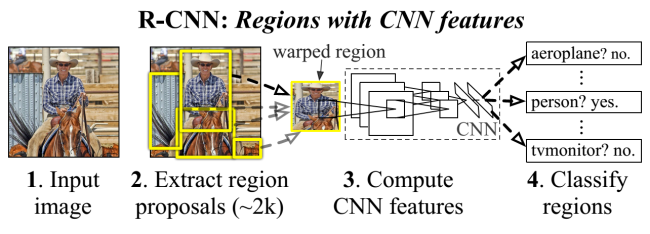

- Rich Feature Hierarchies for Accurate Object Detection (R-CNN)

The first two are the winning and second place entries on the ImageNet13 detection challenge. The winning entry (UvA-Euvision) is an unpublished extension of Koen van de Sande’s earlier work (hence I added the “++”). The third paper is Ross Girshick et al.’s recent work, and while Ross did not compete in the ImageNet challenge due to lack of time, his results on PASCAL are superior to the NEC-MU numbers (as far as I know, no direct comparison exists between Ross’s work and the winning ImageNet entry).

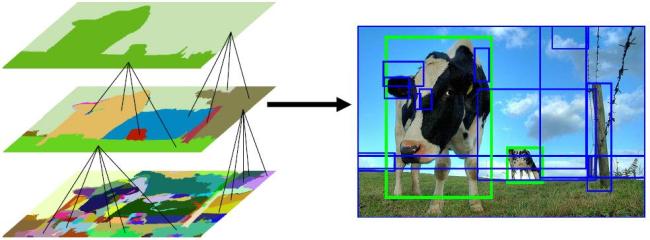

Now, here’s the kicker: all three detection algorithms shun sliding windows in favor of a segmentation pre-processing step, specifically the region generation method of Koen van de Sande et al., Segmentation as Selective Search.

Now, this is not your father’s approach to segmentation: there are a number of notable differences that allow the current batch of methods to succeed where yesteryear’s methods failed. This is really a topic for another post, but the core idea is to generate around 1,000 to 2,000 candidate object segments per image that, with high probability, coarsely capture most of the objects in the image. The candidate segments themselves may be noisy and overlapping and in general need not capture the objects perfectly. Instead, they’re converted to bounding boxes and passed into various classification algorithms.
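The segment-to-box step can be sketched in a few lines. This is a minimal illustration, assuming each candidate segment is given as a set of (row, col) pixel coordinates; the function names are hypothetical, and dropping duplicate boxes is a design choice of this sketch (distinct, overlapping segments often share the same tight box).

```python
from typing import Iterable, List, Set, Tuple

Pixel = Tuple[int, int]            # (row, col)
Box = Tuple[int, int, int, int]    # (x_min, y_min, x_max, y_max), inclusive pixel coords


def segment_to_box(segment: Iterable[Pixel]) -> Box:
    """Tightest axis-aligned box around a (possibly irregular) non-empty segment."""
    rows, cols = zip(*segment)
    return (min(cols), min(rows), max(cols), max(rows))


def segments_to_boxes(segments: List[Set[Pixel]]) -> List[Box]:
    """Convert candidate segments to boxes, keeping only the first copy of each box."""
    seen = set()
    boxes = []
    for seg in segments:
        if not seg:
            continue  # skip empty segments
        box = segment_to_box(seg)
        if box not in seen:
            seen.add(box)
            boxes.append(box)
    return boxes


# Two different segments with the same extent yield one box.
print(segments_to_boxes([{(1, 2), (3, 4)}, {(1, 4), (3, 2)}, {(0, 0)}]))
```

The boxes, not the segments, are what the downstream classifiers see; the segment shape is discarded at this point.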

Incidentally, Segmentation as Selective Search is just one of many recent algorithms for generating candidate regions / bounding boxes (objectness was perhaps the first); however, again, this is a subject for another post…
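For a rough feel of how Selective Search produces its candidates: it starts from an over-segmentation of the image and greedily merges the most similar pair of neighboring regions until one region is left, emitting a box for every region formed along the way. The sketch below is heavily simplified and its similarity is an assumption for illustration: one scalar color in [0, 1] per region and a color term plus a size term. The published method combines several similarities (color, texture, size, fill) over multiple color spaces.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Pixel = Tuple[int, int]            # (row, col)
Box = Tuple[int, int, int, int]    # (x_min, y_min, x_max, y_max), inclusive


def bbox(pixels: Set[Pixel]) -> Box:
    rows, cols = zip(*pixels)
    return (min(cols), min(rows), max(cols), max(rows))


def hierarchical_grouping(
    regions: Dict[int, Set[Pixel]],      # initial over-segmentation
    colors: Dict[int, float],            # hypothetical scalar color per region
    adjacency: Set[FrozenSet[int]],      # pairs of neighboring region ids
    image_size: int,                     # total number of pixels
) -> List[Box]:
    """Greedy bottom-up merging; every region ever formed yields one box."""
    regions = {k: set(v) for k, v in regions.items()}
    colors = dict(colors)
    adjacency = set(adjacency)

    def similarity(pair: FrozenSet[int]) -> float:
        a, b = pair
        color_sim = 1.0 - abs(colors[a] - colors[b])
        # Size term: prefer merging small regions first so that one large
        # region does not swallow everything early on.
        size_sim = 1.0 - (len(regions[a]) + len(regions[b])) / image_size
        return color_sim + size_sim

    boxes = [bbox(px) for px in regions.values()]
    next_id = max(regions) + 1
    while adjacency:
        a, b = max(adjacency, key=similarity)
        pa, pb = regions.pop(a), regions.pop(b)
        ca, cb = colors.pop(a), colors.pop(b)
        new_id, next_id = next_id, next_id + 1
        regions[new_id] = pa | pb
        # Size-weighted mean color of the merged region.
        colors[new_id] = (ca * len(pa) + cb * len(pb)) / (len(pa) + len(pb))
        # Re-point every edge that touched a or b at the merged region.
        neighbors = set()
        for pair in [p for p in adjacency if a in p or b in p]:
            adjacency.discard(pair)
            neighbors |= pair - {a, b}
        adjacency |= {frozenset((new_id, n)) for n in neighbors}
        boxes.append(bbox(regions[new_id]))
    return boxes
```

In practice the initial regions would come from a fast graph-based segmentation (Selective Search uses Felzenszwalb and Huttenlocher’s method), and running the grouping under several strategies is what yields the diverse set of candidates.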

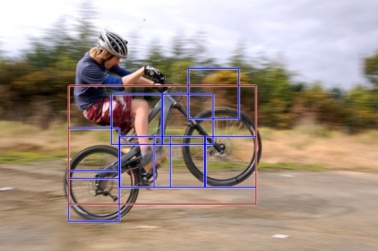

So what advantage does region generation give over sliding window approaches? Sliding window methods perform best for objects with a fixed aspect ratio (e.g., faces, pedestrians). For more general object detection, a search must be performed over position, scale and aspect ratio. The resulting four-dimensional search space is large and difficult to search exhaustively. One way to look at deformable part models is that they perform this search efficiently; however, this places severe restrictions on the models themselves. Thus we were stuck as a community: while we and our colleagues in machine learning derived increasingly sophisticated classification machinery for various problems, for object detection we were restricted to approaches able to handle variable aspect ratio efficiently.
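To make the size of that search space concrete, here is a back-of-the-envelope count of dense windows over position (x and y), scale, and aspect ratio. The minimum window size, scale step, aspect ratios, and stride are illustrative assumptions, not values taken from any of the papers above.

```python
import math
from typing import Sequence


def count_sliding_windows(
    img_w: int,
    img_h: int,
    min_size: float = 32.0,
    scale_step: float = 2 ** 0.25,                      # 4 scales per octave (assumed)
    aspect_ratios: Sequence[float] = (0.5, 1.0, 2.0),  # width / height (assumed)
    stride_frac: float = 0.1,                           # stride = 10% of window side (assumed)
) -> int:
    """Count windows in a dense scan over position, scale and aspect ratio."""
    total = 0
    size = min_size
    while size <= min(img_w, img_h):
        for r in aspect_ratios:
            # Keep the window area at size**2 while changing its shape.
            w, h = size * math.sqrt(r), size / math.sqrt(r)
            if w > img_w or h > img_h:
                continue  # this shape does not fit at this scale
            sx = max(1, int(stride_frac * w))
            sy = max(1, int(stride_frac * h))
            # Number of window positions along x and along y.
            nx = int((img_w - w) // sx) + 1
            ny = int((img_h - h) // sy) + 1
            total += nx * ny
        size *= scale_step
    return total


print(count_sliding_windows(500, 375))
```

Even with these fairly coarse settings, a 500x375 image yields well over 50,000 windows, compared with the 1,000 to 2,000 candidates per image that region generation produces; every extra aspect ratio multiplies the cost of an expensive classifier.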

The new breed of segmentation algorithms allows us to bypass the need for efficiently searching over the space of all bounding boxes and lets us employ more sophisticated learning machinery to perform classification. The unexpected thing is that they actually work!

This is a surprising development and goes counter to everything we’ve known about object detection. For the past ten years, since Viola and Jones popularized the sliding window framework, dense window classification has dominated detection benchmarks (e.g. PASCAL or Caltech Peds). While there have been other paradigms, based for example on interest points, none could match the reliability and robustness of sliding windows. Now all this has changed!

Object detectors have evolved rapidly and their accuracy has increased dramatically over the last decade. So what have we learned? A few lessons come to mind: design better features (e.g. histograms of oriented gradients), employ efficient classification machinery (e.g. cascades), and use flexible models to handle deformation and variable aspect ratios (e.g. part based models). And of course use a sliding window paradigm.
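The cascade idea is what made dense scanning affordable: most windows are background and can be rejected by a few cheap stages. Below is a minimal soft-cascade-style sketch, assuming each stage is a (score function, rejection threshold) pair and that scores accumulate across stages; Viola and Jones’ original cascade instead thresholds each stage’s output separately. The stage functions in the usage lines are hypothetical stand-ins for real weak classifiers.

```python
from typing import Callable, List, Sequence, Tuple

# Each stage: (score function on a feature vector, rejection threshold).
Stage = Tuple[Callable[[Sequence[float]], float], float]


def cascade_classify(x: Sequence[float], stages: List[Stage]) -> Tuple[bool, int]:
    """Return (accepted, number of stages evaluated) for one window."""
    score = 0.0
    for i, (score_fn, threshold) in enumerate(stages, start=1):
        score += score_fn(x)
        if score < threshold:
            return False, i  # early reject: later, costlier stages never run
    return True, len(stages)


# Hypothetical two-stage cascade on 2-D features.
stages = [(lambda x: x[0], 0.0), (lambda x: x[1], 0.5)]
print(cascade_classify([-1.0, 5.0], stages))  # rejected by the first stage
print(cascade_classify([1.0, 0.0], stages))   # passes both stages
```

The speedup comes from the second return value: averaged over all windows, the number of stages evaluated stays small.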

Recent developments have shattered our collective knowledge of how to perform object detection. Take a look at this diagram from Ross’s recent work:

Observe:

- sliding windows -> segmentation
- designed features -> deep learned features
- parts based models -> linear SVMs
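In R-CNN, each region proposal is warped to a fixed size, passed through a CNN to obtain features, scored by per-class linear SVMs, and overlapping detections are then pruned with greedy non-maximum suppression (NMS). The sketch below covers only the last two steps, assuming the features are already computed; the weights, bias, and the 0.3 overlap threshold are placeholders, not R-CNN’s trained values.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), continuous coordinates


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def detect(boxes: List[Box], feats: List[Sequence[float]], weights: Sequence[float],
           bias: float, iou_thresh: float = 0.3) -> List[Tuple[Box, float]]:
    """Score proposals with a linear classifier, then apply greedy NMS."""
    scores = [sum(f * w for f, w in zip(feat, weights)) + bias for feat in feats]
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a higher-scoring kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return [(boxes[i], scores[i]) for i in keep]


boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0), (20.0, 20.0, 30.0, 30.0)]
print(detect(boxes, [[1.0], [0.9], [0.5]], weights=[1.0], bias=0.0))
```

Because proposals are scored independently, any classifier can be dropped into `detect`; nothing requires it to support an efficient search over aspect ratio.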

Now ask yourself: a few years ago, would you have expected such a dramatic overturning of all our knowledge about object detection!?

Our field has jumped out of a local minimum and exciting times lie ahead. I expect progress to be rapid in the next few years – it’s an amazing time to be doing research in object detection 🙂

Great post. I’d just like to point out that this style of approach can be traced back to the winning segmentation entry of the 2009 PASCAL challenge (the Bonn team).

Great post. Just as a side comment, to go further back in history: the original GrabCut work (SIGGRAPH 04) did not use any parametric maxflow ideas. But when we did the technology transfer in 2005 into MS Office 2010+, we used parametric maxflow to achieve better results. The resulting method is quite similar to CPMC. Details are explained here: http://wwwpub.zih.tu-dresden.de/~cvweb/publications/papers/2011/RotherEtAlMRFBook-GrabCut.pdf

Great post and great blog! Hope you’ll continue blogging.

Great point. CPMC helped open the door to this style of approach and was very influential in changing people’s thinking. I really should write a blog post going into the region generation approaches in more detail :-). What’s amazing to me is just how dominant this style of approach has now become – on PASCAL 2010 [edited – originally incorrectly wrote PASCAL 2007] DPM achieves 29.6 mAP versus 43.5 for Ross’s R-CNN – that’s a 50% improvement!
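For reference, the relative improvements implied by the two pairs of mAP numbers quoted in this exchange (PASCAL 2010 here, and PASCAL 2007 in the reply that follows) work out as:

$$
\frac{43.5 - 29.6}{29.6} \approx 0.47, \qquad \frac{48.0 - 33.7}{33.7} \approx 0.42
$$

Both fall in the 40 to 50% range, consistent with the rough “50%” figure.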

PS — congrats on your move to Berkeley. I think that will be a great fit 🙂

Thanks! 🙂 I think it’s 48.0 vs 33.7 on 2007, but it’s the same improvement. Regarding the aspect ratio problem for sliding windows, a square window would probably be fine with these more powerful features; it would just need some form of bbox regression at the end, which is already done anyway. The fact that segmentation proposals give you a silhouette may be a more significant advantage in the long run.
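The bounding-box regression mentioned here predicts offsets relative to the input window. A minimal sketch of the target parameterization used in R-CNN: with window center and size (P_x, P_y, P_w, P_h) and ground truth G, the targets are t_x = (G_x - P_x)/P_w, t_y = (G_y - P_y)/P_h, t_w = log(G_w/P_w), t_h = log(G_h/P_h). Only the encoding and decoding are shown; the regressor that predicts the offsets (in R-CNN, a regularized linear regression on CNN features) is omitted, and the function names are hypothetical.

```python
import math
from typing import Tuple

CenterBox = Tuple[float, float, float, float]  # (center_x, center_y, width, height)
Deltas = Tuple[float, float, float, float]     # (t_x, t_y, t_w, t_h)


def encode(proposal: CenterBox, target: CenterBox) -> Deltas:
    """Regression targets: scale-normalized center shifts, log size ratios."""
    px, py, pw, ph = proposal
    gx, gy, gw, gh = target
    return ((gx - px) / pw, (gy - py) / ph, math.log(gw / pw), math.log(gh / ph))


def decode(proposal: CenterBox, deltas: Deltas) -> CenterBox:
    """Apply predicted offsets to a proposal (inverse of encode)."""
    px, py, pw, ph = proposal
    dx, dy, dw, dh = deltas
    return (px + pw * dx, py + ph * dy, pw * math.exp(dw), ph * math.exp(dh))


# A square window refined into a 2:1 box: t_w = log(2), t_h = 0.
square = (50.0, 50.0, 40.0, 40.0)
wide = (55.0, 48.0, 80.0, 40.0)
print(encode(square, wide))
```

The log-ratio terms are what let a square window be stretched to an arbitrary aspect ratio after classification.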

Thanks Piotr. Great post. I’m curious what you think about this paper:

“Beyond Hard Negative Mining: Efficient Detector Learning via Block-Circulant Decomposition”. Joao Henriques, Joao Carreira, Rui Caseiro, Jorge Batista


Does that offer any hope for our friends, the sliding windows? Granted, it chiefly deals with the training phase and does little to speed up classification, so it would seem the fundamental problems you raise remain.

While I completely agree that the segmentation approach is very interesting from a computation and accuracy point of view, Piotr forgot to mention that a sliding window approach (mine, aka OverFeat) obtained very similar results in the competition and won the localization task. In fact, according to the slides of the detection winners, our sliding window approach performs better if they remove their classifier trick: in that case they obtain 18.3% mAP while I get 19.4% mAP. I’ll post a paper on arXiv soon that explains everything.

@Pierre, do share a link once you post the arXiv paper; I’ll be curious to learn more details. I wanted to clarify one thing: my guess is that most of the new methods I described here are performing well not because of the segmentation pre-processing step but *despite* it. By this I mean that, given infinite computational power, dense sliding windows at various aspect ratios would probably do better. What’s really surprising is that the segmentation pre-processing doesn’t hurt. And indeed, in the long run I think it will enable novel features explicitly capturing object shape and will eventually outperform methods that take (rectangular) windows as inputs. However, there are certainly lots of unanswered questions, and it will be exciting to see the direction things go!

Thanks, Piotr, for this great post. Let me add the link to the OverFeat paper:

http://arxiv.org/abs/1312.6229

Yes, thank you. An update to that paper is coming with the next arXiv release, where I report a new detection state of the art of 24.3% mAP. I will also be releasing a feature extractor at that time.

@pdollar – It is not necessarily true that the mentioned methods work well despite the segmentation pre-processing: such a pre-processing step also discards many unlikely object locations, effectively reducing the possible variations of visual features, which in turn makes the learning problem easier. Our journal version of Selective Search hints at this for bag-of-visual-words features: we show that the selective search windows yield slightly higher accuracy than exhaustive search, even though exhaustive search gives marginally better object locations (last part of section 5.4, http://www.huppelen.nl/publications/selectiveSearchDraft.pdf).
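“Better object locations” here refers to how well a set of candidate windows covers the ground truth. One common measure, used in the Selective Search papers, is the average best overlap (ABO): for each ground-truth box, take the highest IoU achieved by any candidate window, then average over ground-truth boxes. A minimal sketch, assuming a non-empty list of ground-truth boxes in (x1, y1, x2, y2) form; recall at IoU 0.5 is included as the related count.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def best_overlaps(gt_boxes: List[Box], proposals: List[Box]) -> List[float]:
    """Best IoU of any proposal with each ground-truth box (0 if no proposals)."""
    return [max((iou(g, p) for p in proposals), default=0.0) for g in gt_boxes]


def average_best_overlap(gt_boxes: List[Box], proposals: List[Box]) -> float:
    overlaps = best_overlaps(gt_boxes, proposals)
    return sum(overlaps) / len(overlaps)


def recall_at(gt_boxes: List[Box], proposals: List[Box], thresh: float = 0.5) -> float:
    """Fraction of ground-truth boxes covered by some proposal at IoU >= thresh."""
    overlaps = best_overlaps(gt_boxes, proposals)
    return sum(o >= thresh for o in overlaps) / len(overlaps)
```

Exhaustive search can score slightly higher on such a measure while still giving a classifier a harder learning problem, which is the trade-off described in this comment.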

p.s. great post.

Great post and discussions. I second the idea that the learning problem is made easier when the learner doesn’t have to deal with low-quality hypotheses. In some of our early tests (the Bonn VOC team) with the multiple CPMC segment hypotheses on PASCAL we also discovered that the accuracy would drop when more segments are used in training.

Paper: IJCV_12sequential.pdf

Of course, that was still a kernel SVM, with a limited number of training images (as of PASCAL 2010). Later, with bigger training sets, we found that more segments start to help more. This hints that the “simpler learning problem” effect could be more significant with limited training data.

Sorry, I meant Figure 9 in that paper.

I already posted this on G+, but I guess it is interesting to post it at the source itself. This type of approach is very similar to what is done by Clément Farabet

http://hal.archives-ouvertes.fr/hal-00742077

on a different set of datasets, also with a deep learning approach.

Nice text and good problem localization, but I think there are other ways to create smart object hypotheses besides “sliding windows -> segmentation”; for example, “Object Class Recognition by Unsupervised Scale-Invariant Learning” by Zisserman et al.

I think segmentation is too slow for industrial use (I have read about good hardware speedups of semantic segmentation by Clément Farabet and Eugenio Culurciello, but I think industry needs a faster solution), and I think the future of detection lies in good feature points.

This is interesting. The early use of segmentation has a parallel in the visual cortex, where border ownership is assigned early on, within 20-30 milliseconds after the visual information arrives (see Williford & von der Heydt, Border ownership coding. Scholarpedia 2013). This is before object recognition in infero-temporal cortex even begins. The assignment of border ownership implies some kind of object representation, and there is more experimental evidence for that, for example, persistence and short-term memory.

Thanks, fantastic read: Border-ownership coding

I am grateful that others continue to take the time to write meaningful blog posts.